Quantum Machine Learning 1.0

A big future for small devices

Quantum machine learning is a new buzzword in quantum computing. This emerging field asks — amongst other things — how we can use quantum computers for intelligent data analysis. At Xanadu we are very excited about quantum machine learning and spend a fair amount of time thinking about it. Here is why.

First of all, it is important to note that quantum machine learning is very young, which means that it is not yet clear what results and commercial applications to expect from it. This was evident at the “Quantum meets Industry” panel at the quantum machine learning conference in Bilbao, Spain. When asked whether the time is ripe for commercial investment in quantum machine learning, the experts from organizations such as IBM, Microsoft and NASA were noticeably careful with their answers. Still, almost every company involved in quantum computing today, including those represented on the panel, has a machine learning group.

If even the ‘big players’ are struggling to make definite statements about the — let’s say 5-year — outlook on using quantum computers for machine learning tasks, should quantum computing startups like Xanadu get on board? We think the answer is yes and want to put three arguments forward:

- Early-generation quantum devices are promising newcomers to the growing collection of AI accelerators, thereby enabling machine learning.

- Quantum machine learning can lead to the discovery of new models and thereby innovate machine learning.

- Machine learning, and quantum machine learning in particular, will increasingly permeate all aspects of quantum computing, redefining the way we think about quantum computing.

Let us go through these points one by one.

1. Enable machine learning

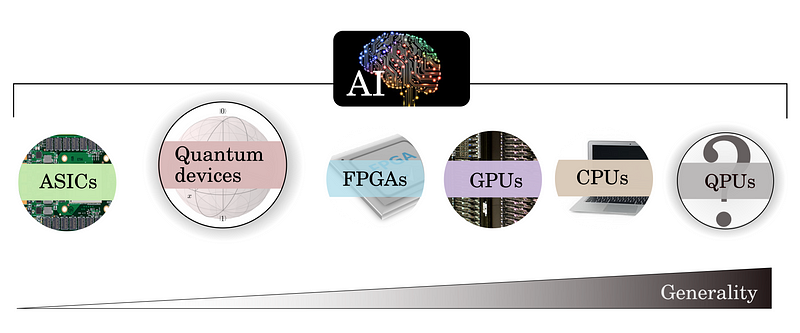

Early-generation quantum devices vary in their programming models, their generality, the quantum advantage they promise, and the hardware platforms that they run on. Across the board, they are very different from the universal processors that researchers envisioned when the field started in the 1990s. For machine learning, this may be a feature rather than a bug.

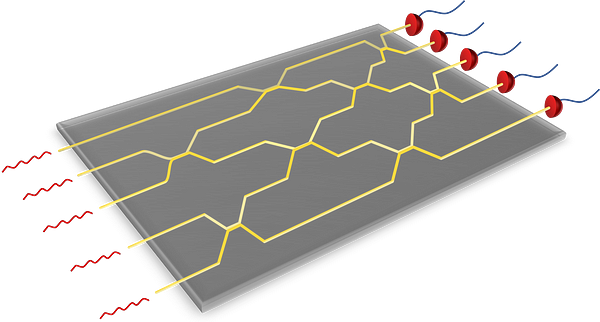

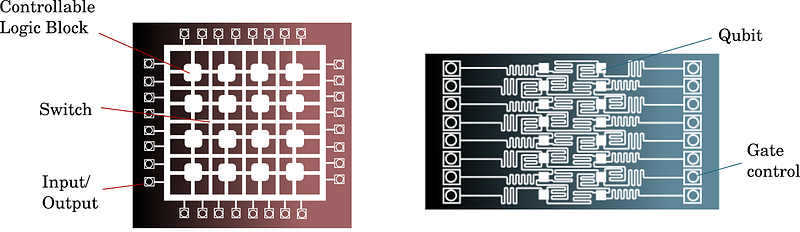

Quantum devices as special-purpose AI accelerators

Many current quantum technologies resemble special-purpose hardware like Application-Specific Integrated Circuits (ASICs) rather than a general-purpose CPU. They are hardwired to implement a limited class of quantum algorithms. More advanced quantum devices can be programmed to run simple quantum circuits. This makes them more similar to Field-Programmable Gate Arrays (FPGAs), which are integrated circuits configured with a low-level, hardware-specific hardware description language (HDL). In both cases, intimate knowledge of the hardware design and its limitations is needed to run effective algorithms.

ASICs and FPGAs find growing use in machine learning and artificial intelligence. There, their slim architectures reduce the overhead of a central processor and are naturally suited to the task they specialize in. If current quantum technologies resemble this classical special-purpose hardware, they could find applications in machine learning in a similar fashion, even without universal quantum computing or exponential quantum speedups.

“Quantum technologies may eventually have a place in the mix of AI hardware as we develop newer and newer techniques to advance towards artificial general intelligence.”

A look at the most advanced AI solutions reveals that they already use a blend of technologies. More and more computation is done on special-purpose devices located at the edge, where technology interacts with its environment. Think of fingerprint recognition for unlocking a phone, or smile detection in a camera. At the other end of the spectrum, calculations are done on GPU clusters (for instance, for traffic routing or photo tagging). In fact, a modern GPU is already a blend of technologies in itself. Nvidia's latest Volta chips include specialized low-precision units called Tensor Cores, which are designed specifically to accelerate the training of neural networks. Google follows a similar path with its Tensor Processing Units (TPUs), which are designed to support the TensorFlow machine learning framework. In short, AI has already embraced heterogeneity. Quantum technologies may eventually have a place in this mix of AI hardware, and the mix has to be as strong as possible if we want to advance towards artificial general intelligence.

Finally, hardware can significantly shape the advancement of software. In the 2010s, the use of GPUs contributed to the renaissance of neural network models, which had been around for decades but were largely dismissed as untrainable. Similarly, quantum accelerators could make their own contribution to lifting specific machine learning methods into the realm of the feasible, or even of the cutting edge. This may be particularly true for methods that are considered too hard to train on classical hardware and that were superseded by more convenient competitors.

What quantum computers are good at

If early-generation quantum devices can be thought of as special-purpose AI accelerators, what exactly can quantum computers contribute to machine learning and AI? Why would we want to use “quantum ASICs”? Let’s look at a selection of exciting candidate tasks, namely optimization, linear algebra, sampling, and kernel evaluations.

Optimization. Just like in machine learning, optimization is a prominent task in quantum physics. Physicists (and quantum chemists) are typically interested in finding the point of lowest energy in a high-dimensional energy landscape. This is the basic paradigm of adiabatic quantum computing and quantum annealing. Unsurprisingly, optimization was one of the first tasks for quantum computers investigated in the context of machine learning. The D-Wave quantum annealer is a special-purpose device that can solve so-called quadratic unconstrained binary optimization (QUBO) problems. It was used as early as 2008 to solve classification tasks. More recently, researchers have proposed the hybrid quantum-classical technique of variational circuits. Here, a quantum device evaluates a cost function that is hard to compute classically, and a classical device performs the optimization based on this information.
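A QUBO problem asks for the binary vector x that minimizes xᵀQx for a given matrix Q. The sketch below uses a small, made-up matrix Q and enumerates all 2ⁿ candidates classically. This exhaustive search becomes infeasible as n grows, and it is the search an annealer would try to carry out physically. The code illustrates the problem class only. It does not show how D-Wave hardware works.

```python
from itertools import product

def qubo_energy(x, Q):
    """Return x^T Q x for a binary vector x and a square QUBO matrix Q."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))

def brute_force_qubo(Q):
    """Enumerate all 2^n binary vectors and return (best_x, best_energy).

    The cost is exponential in n, which is why dedicated hardware is of interest.
    """
    n = len(Q)
    best_x, best_e = None, float("inf")
    for bits in product((0, 1), repeat=n):
        e = qubo_energy(bits, Q)
        if e < best_e:
            best_x, best_e = bits, e
    return best_x, best_e

# Hypothetical 3-variable instance: negative diagonal entries reward setting a bit,
# positive off-diagonal entries penalize setting both bits of a neighbouring pair.
Q = [
    [-1.0, 2.0, 0.0],
    [0.0, -1.0, 2.0],
    [0.0, 0.0, -1.0],
]
x, e = brute_force_qubo(Q)
print(x, e)  # → (1, 0, 1) -2.0
```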

Linear algebra. When people speak about potential exponential quantum speedups for machine learning, they usually refer to the inherent ability of quantum computers to execute linear algebra computations. There are many subtleties to this claim, and its prospects on near-term hardware are not always clear. One of the bottlenecks is data encoding. Suppose we want to use a quantum computer as a super-fast linear algebra engine for large matrix multiplications and eigendecompositions (not unlike TPUs). We first have to “load” the large matrix onto the quantum device, and this procedure is highly non-trivial.
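One common way to “load” classical data is amplitude encoding. A vector of N numbers becomes the amplitudes of a state on about log₂N qubits. The helper below, `amplitude_encode` (a name we chose for illustration), only computes the target amplitudes by padding and normalizing. It says nothing about the cost of the circuit that would prepare them, and that cost is the non-trivial part mentioned above.

```python
import math

def amplitude_encode(data):
    """Map a real vector to the normalized amplitudes of an n-qubit state.

    Pads with zeros up to the next power of two and rescales to unit norm.
    Returns (n_qubits, amplitudes).
    """
    if not data:
        raise ValueError("data must be non-empty")
    n_qubits = max(1, math.ceil(math.log2(len(data))))
    padded = list(data) + [0.0] * (2 ** n_qubits - len(data))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot encode the zero vector")
    return n_qubits, [v / norm for v in padded]

n, amps = amplitude_encode([3.0, 0.0, 4.0])
print(n, amps)  # → 2 [0.6, 0.0, 0.8, 0.0]
```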

However, there may be near-term benefits in understanding quantum computers as fast linear algebra processing units. Mathematically speaking, a quantum gate multiplies an exponentially large (or even infinitely large) matrix with a similarly large vector. Specific costly linear algebra computations, namely those corresponding to quantum gates, can therefore be done in a single operation on a quantum computer. This perspective is leveraged when building machine learning models out of quantum algorithms. For example, we can think of a quantum gate as a (highly structured) linear layer of an enormous neural network.
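A few lines of code can check the view of a gate as a huge matrix. The helper names and the qubit-ordering convention in this sketch are our own choices for illustration. The code embeds a Hadamard gate acting on one qubit into the full 2ⁿ × 2ⁿ matrix via Kronecker products, then multiplies that matrix with an n-qubit state vector. A classical simulation has to store and apply the whole matrix, while a quantum device implements the same linear map as a single gate.

```python
import math

def kron(a, b):
    """Kronecker product of two dense matrices stored as lists of lists."""
    return [
        [a[i][j] * b[p][q] for j in range(len(a[0])) for q in range(len(b[0]))]
        for i in range(len(a))
        for p in range(len(b))
    ]

def embed_gate(gate, target, n_qubits):
    """Return the 2^n x 2^n matrix of a 2x2 gate acting on qubit `target`.

    Convention (our choice): qubit 0 is the leftmost tensor factor.
    """
    identity = [[1.0, 0.0], [0.0, 1.0]]
    full = [[1.0]]
    for k in range(n_qubits):
        full = kron(full, gate if k == target else identity)
    return full

def matvec(m, v):
    """Dense matrix-vector product."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

h = 1 / math.sqrt(2)
hadamard = [[h, h], [h, -h]]

n = 3
state = [1.0] + [0.0] * (2 ** n - 1)  # |000>
U = embed_gate(hadamard, target=0, n_qubits=n)
out = matvec(U, state)
print(len(U), "x", len(U[0]))  # → 8 x 8
print(out)  # amplitude h at indices 0 and 4 only: (|000> + |100>)/sqrt(2)
```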

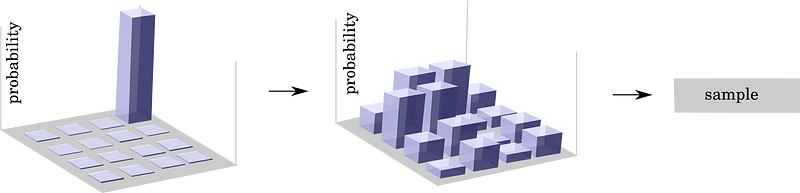

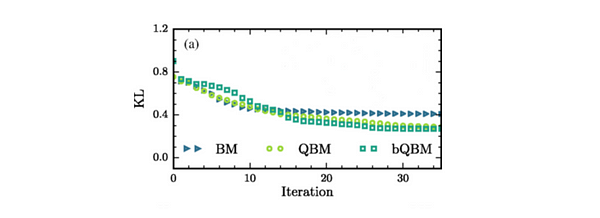

Sampling. All quantum computers can be understood as samplers. They prepare a special class of distributions (quantum states) and sample from these distributions via measurements. A very promising avenue is therefore to explore how samples from quantum devices can be used to train machine learning models. This has been investigated for Boltzmann machines and Markov logic networks. In these models the so-called Gibbs distribution plays an important role. It is inspired by physics and is therefore comparatively easy to realize with a physical system.
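For a system of n spins, the Gibbs distribution assigns each configuration s a probability proportional to exp(-E(s)/T). The sketch below computes this distribution exactly for a hypothetical three-spin Ising model and draws samples from it. The couplings J, biases h and temperature T are made up. The enumeration costs 2ⁿ energy evaluations, and this is the step a physical sampler would replace.

```python
import math
import random
from itertools import product

def ising_energy(s, J, h):
    """E(s) = -sum_{i<j} J[i][j] s_i s_j - sum_i h[i] s_i, spins s_i in {-1, +1}."""
    n = len(s)
    pair = sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    field = sum(h[i] * s[i] for i in range(n))
    return -pair - field

def gibbs_distribution(J, h, temperature=1.0):
    """Return {configuration: probability} with p(s) = exp(-E(s)/T) / Z."""
    configs = list(product((-1, 1), repeat=len(h)))
    weights = [math.exp(-ising_energy(s, J, h) / temperature) for s in configs]
    z = sum(weights)  # partition function
    return {s: w / z for s, w in zip(configs, weights)}

# Hypothetical 3-spin model: ferromagnetic couplings and a small bias on spin 0.
J = [[0.0, 1.0, 1.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
h = [0.2, 0.0, 0.0]
p = gibbs_distribution(J, h)

# Exact enumeration scales as 2^n; a physical sampler would return
# configurations directly, which is what a learning algorithm consumes.
rng = random.Random(0)
samples = rng.choices(list(p), weights=list(p.values()), k=5)
print(max(p, key=p.get))  # → (1, 1, 1)
print(samples)
```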

“We should think of early-generation quantum computers as small, partially programmable special-purpose devices that can take over costly jobs for machine learning which naturally suit them.”

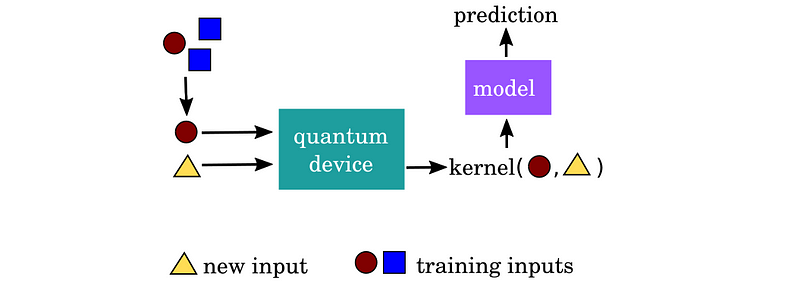

Kernel evaluation. A very recent idea from Xanadu illustrates that quantum devices could also take over more specific tasks in machine learning. Kernel methods are machine learning models built on a similarity measure between data points, called a kernel. Quantum devices can be used to estimate certain kernels, including ones that are difficult to compute classically. The estimates from the quantum computer can then be fed into a standard kernel method such as a support vector machine. Inference and training are done purely classically, but they are augmented with the quantum special-purpose device.
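A quantum kernel is often defined as the squared overlap k(x, x′) = |⟨φ(x)|φ(x′)⟩|² between two data-encoding states. The sketch below simulates this kernel classically for a simple qubit feature map that encodes each feature as a rotation angle. We chose this feature map for illustration. It is not the one in Xanadu's proposal, and this particular kernel is easy to compute classically. On hardware, the overlap would instead be estimated from measurement statistics.

```python
import math

def feature_state(x):
    """Encode each feature x_i as RY(x_i)|0> and return the product state vector."""
    state = [1.0]
    for xi in x:
        qubit = [math.cos(xi / 2), math.sin(xi / 2)]
        state = [a * b for a in state for b in qubit]  # tensor product
    return state

def quantum_kernel(x, y):
    """k(x, y) = |<phi(x)|phi(y)>|^2, here by exact (real-valued) simulation."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2

def gram_matrix(xs):
    """Kernel matrix K[i][j] = k(xs[i], xs[j]) for a list of data points."""
    return [[quantum_kernel(a, b) for b in xs] for a in xs]

data = [[0.1, 0.5], [0.2, 0.4], [2.5, 3.0]]  # hypothetical 2-feature inputs
K = gram_matrix(data)
for row in K:
    print([round(v, 3) for v in row])
```

The Gram matrix `K` could then be passed to an ordinary kernel method, for example scikit-learn's `SVC(kernel="precomputed")`. Training and inference then stay classical, as described above.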

In summary, we should think of early-generation quantum computers as small, partially programmable special-purpose devices that can accelerate certain tasks in machine learning, much as GPUs enabled deep learning.

2. Innovate machine learning

Besides enabling existing machine learning techniques, quantum machine learning potentially has a lot more to offer. Recently, a growing number of physicists trained in quantum theory and quantum computing have begun to think about machine learning. Having physicists enter the field has proven fruitful in the past. Consider the physicist John Hopfield, who brought his intimate knowledge of the Ising model into machine learning and created the associative memory model now known as the Hopfield network. Quantum computing can lead to entirely new machine learning models. These models are tailor-made for quantum devices, and they may turn out to work well in ways the machine learning community has simply never thought of. Let us illustrate this with two examples that are actively investigated in quantum machine learning.

Sampling from quantum distributions

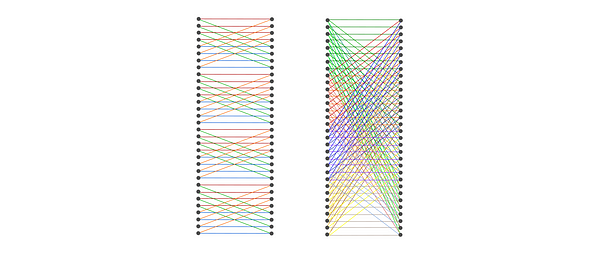

As mentioned above, quantum devices are good at sampling. For example, quantum annealers can be used to approximately sample from a Gibbs distribution to train Boltzmann machines. This is not straightforward, however, since the quantum device actually prepares a quantum Gibbs distribution. Instead of trying to “make things classical”, researchers investigated what happens if we use the natural quantum distribution. It turns out that in some cases “quantum samples” can be very useful for training, as shown in the figure on the left.
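A single qubit is enough to show how a quantum Gibbs distribution can differ from a classical one. Take H = -hZ - ΓX, where the transverse field Γ is the “quantum” ingredient. This follows the spirit of quantum Boltzmann machines, but the specific Hamiltonian and values are our illustrative assumptions. Measuring the thermal state ρ = e^(-βH) / Tr e^(-βH) in the Z basis gives p(0) = ½[1 + tanh(βE)·h/E] with E = √(h² + Γ²). This reduces to the classical Gibbs probability ½[1 + tanh(βh)] only when Γ = 0. The sketch evaluates both formulas.

```python
import math

def quantum_gibbs_p0(h, gamma, beta=1.0):
    """P(measure |0>) in rho = exp(-beta H) / Tr exp(-beta H), H = -h Z - gamma X.

    Uses exp(a n.sigma) = cosh(a) I + sinh(a) n.sigma with E = sqrt(h^2 + gamma^2).
    """
    energy = math.hypot(h, gamma)
    if energy == 0:
        return 0.5
    return 0.5 * (1 + math.tanh(beta * energy) * h / energy)

def classical_gibbs_p_up(h, beta=1.0):
    """Classical Gibbs probability of spin s = +1 for the energy E(s) = -h s."""
    return 0.5 * (1 + math.tanh(beta * h))

h = 1.0
print(classical_gibbs_p_up(h))         # classical spin
print(quantum_gibbs_p0(h, gamma=0.0))  # same value: no transverse field
print(quantum_gibbs_p0(h, gamma=1.0))  # smaller: the transverse field changes the statistics
```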

Variational quantum circuits

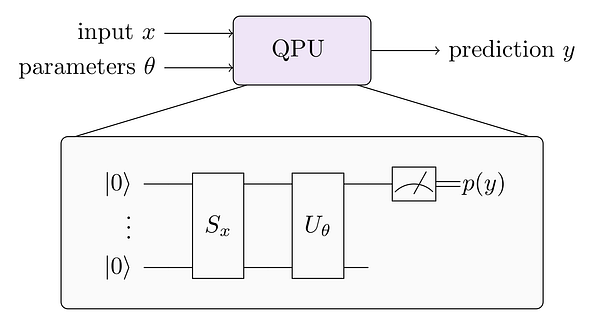

As a second example, consider a programmable quantum device. Here, “programmable” means that some device parameters can be tuned to change the specifications of an otherwise fixed computation. We set some of these parameters to the values of input data x, and we treat other parameters as trainable variables θ. The device ultimately gives us outputs y = f(x, θ) that depend on both inputs and variables. Such a quantum device (and this description is really very generic) implements a supervised learning model. This model is sometimes called a variational classifier, relating it to the concept of variational (i.e., trainable) quantum circuits. In a similar way, we can construct unsupervised models.

The function f that the quantum device computes can be very specific to its hardware architecture. It also depends on how parameters enter the computation and on how one relates variables, inputs and outputs to the quantum algorithm. Taken together, however, we get a “quantum model”. Importantly, suppose we do not know how to simulate the quantum model with a classical computer. Then we have a new ansatz for machine learning, and also one that can only be executed on a quantum device. The emerging literature on variational circuits shows how to train such “hardware-derived” models with the help of classical computers. Groups around the world are currently investigating the power and limits of such quantum models.
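Here is a minimal sketch of y = f(x, θ), simulated classically on a single qubit. The input x enters as the angle of an RY rotation, a second rotation RY(θ) is the trainable part, and the output is the expectation value ⟨Z⟩. Gradients come from the parameter-shift rule, which is exact for rotations of this form. A classical gradient-descent loop then updates θ. The toy data, learning rate and circuit layout are all assumptions made for illustration. Real variational classifiers use more qubits and richer circuits.

```python
import math

def ry(angle):
    """Single-qubit rotation RY(angle) = exp(-i angle Y / 2), a real 2x2 matrix."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    return [[c, -s], [s, c]]

def apply(gate, state):
    """Apply a 2x2 gate to a single-qubit state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def model(x, theta):
    """y = f(x, theta): encode x with RY(x), apply trainable RY(theta), return <Z>."""
    state = apply(ry(theta), apply(ry(x), [1.0, 0.0]))
    return state[0] ** 2 - state[1] ** 2

def grad_theta(x, theta):
    """Parameter-shift rule: df/dtheta = [f(theta + pi/2) - f(theta - pi/2)] / 2."""
    return (model(x, theta + math.pi / 2) - model(x, theta - math.pi / 2)) / 2

def loss(data, theta):
    """Mean squared error between model outputs and +/-1 labels."""
    return sum((model(x, theta) - y) ** 2 for x, y in data) / len(data)

# Hypothetical toy data: small inputs labelled -1, large inputs labelled +1.
data = [(0.1, -1), (0.3, -1), (2.8, 1), (3.0, 1)]
theta, lr = 0.1, 0.1
initial = loss(data, theta)
for _ in range(100):
    g = sum(2 * (model(x, theta) - y) * grad_theta(x, theta) for x, y in data) / len(data)
    theta -= lr * g  # classical update step
print(initial, loss(data, theta))
print([1 if model(x, theta) > 0 else -1 for x, _ in data])  # → [-1, -1, 1, 1]
```

On hardware, each call to `model` would be replaced by repeated circuit executions whose measurement averages estimate ⟨Z⟩. The classical update loop would stay the same.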

Discovering new machine learning models is similar to searching for gold on a yet unknown island. In the case of quantum machine learning, we have found some promising signs of gold at the first beach, which is why we are building better expedition gear and venturing further — excited by what we might find.

3. Redefine the way we think about quantum computing

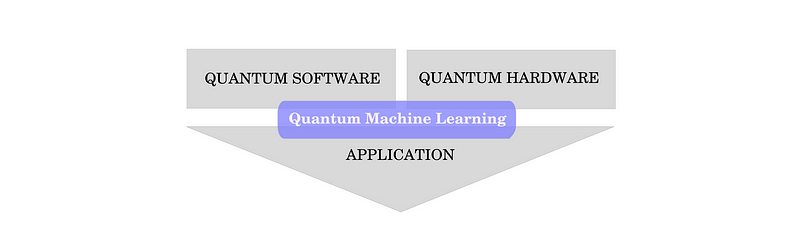

There is a third, more “behind-the-scenes” reason why we think that quantum machine learning is essential. Quantum machine learning, and its central subtask, optimization, are not only subfields of quantum computing, they are increasingly becoming approaches to quantum computing itself. As such, they have the potential to redefine the way we think about quantum computing. This holds for software design, hardware development and applications that rely on quantum computing.

Quantum software design

So far, quantum algorithms have been carefully composed by people with deep knowledge of the tricks of the trade. Even the “bible” of quantum computing, the textbook by Michael Nielsen and Isaac Chuang, remarks that “coming up with good quantum algorithms seems to be a difficult problem”. But quantum algorithms could also be learned.

Consider, for example, the preparation of resource states. Resource states appear in many settings, e.g., in continuous-variable applications or in error correction with magic states, where the computation relies on a specific state being prepared as an input. Often, the algorithm to prepare this initial state is unknown. However, given a device with a certain type of gates, we can let the computer “learn” a gate sequence that prepares the desired state on the specific hardware. Likewise, machine learning approaches have been used to design entire quantum experimental setups (e.g., to generate highly entangled quantum systems).
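We can phrase learning a preparation sequence as tuning gate parameters to maximize the fidelity with the desired state. In this toy sketch the “hardware” offers only RY and RZ rotations on one qubit, and the target state is made up. A brute-force grid search stands in for the gradient-based or other optimizers that would be used on realistic devices.

```python
import cmath
import math

def prepare(theta_y, theta_z):
    """Apply RY(theta_y), then RZ(theta_z), to |0>; return the two complex amplitudes."""
    c, s = math.cos(theta_y / 2), math.sin(theta_y / 2)
    return [c * cmath.exp(-1j * theta_z / 2), s * cmath.exp(1j * theta_z / 2)]

def fidelity(target, state):
    """|<target|state>|^2 for normalized single-qubit states."""
    overlap = sum(t.conjugate() * a for t, a in zip(target, state))
    return abs(overlap) ** 2

# Hypothetical target resource state: cos(0.6)|0> + e^{0.9i} sin(0.6)|1>.
target = [complex(math.cos(0.6)), cmath.exp(0.9j) * math.sin(0.6)]

# Search a 64 x 64 grid of gate angles for the best-matching "gate sequence".
steps = 64
grid = [2 * math.pi * k / steps for k in range(steps)]
best_f, best_angles = max(
    (fidelity(target, prepare(ty, tz)), (ty, tz)) for ty in grid for tz in grid
)
print(round(best_f, 4), best_angles)
```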

“The ideas of machine learning can transform the way we do quantum algorithmic design: Instead of composing algorithms, we can let the device learn them.”

Quantum hardware development

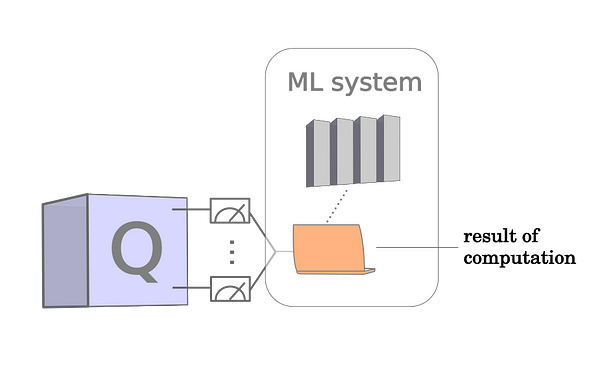

Intimate knowledge of machine learning can also help to build a quantum computer. Building a quantum computer will generate large amounts of labeled and unlabeled data. For example, data is generated when reading out the final quantum state of the device, when assessing the performance of gates, or when estimating measurement results. Quantum machine learning has a very active subfield in which classical machine learning is used to make sense of data produced by quantum experiments in the lab. Machine learning systems could well become a standard component of quantum hardware.
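Qubit readout is a toy example of classical learning on lab data. On some platforms, such as superconducting qubits, each measurement shot yields an analog signal. This signal is often summarized as a point (I, Q) and must be assigned to the outcome 0 or 1. The sketch trains a nearest-centroid classifier on made-up calibration shots. Real readout pipelines may use more powerful models, and the numbers do not describe any actual device.

```python
import math

def fit_centroids(points, labels):
    """Average the (I, Q) points of each label into one centroid per class."""
    sums = {}
    for (i_val, q_val), label in zip(points, labels):
        si, sq, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (si + i_val, sq + q_val, n + 1)
    return {label: (si / n, sq / n) for label, (si, sq, n) in sums.items()}

def classify(centroids, point):
    """Assign a new readout point to the label of the nearest centroid."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))

# Hypothetical calibration shots recorded after preparing |0> (label 0) or |1> (label 1).
points = [(1.0, 0.1), (0.9, -0.1), (1.1, 0.0), (-0.9, 0.6), (-1.1, 0.4), (-1.0, 0.5)]
labels = [0, 0, 0, 1, 1, 1]
centroids = fit_centroids(points, labels)
print(classify(centroids, (0.8, 0.2)), classify(centroids, (-0.7, 0.5)))  # → 0 1
```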

“Machine learning may one day be a standard technique to read out the results of a computation with a quantum device.”

Applications

Quantum machine learning techniques are also closely tied to a variety of application areas. Quantum chemistry, for instance, is likewise interested in minimizing high-dimensional and difficult cost functions. One example is finding the lowest-energy configurations of molecules for drug discovery or materials science. Quantum computers can tackle these problems with methods like variational quantum eigensolvers, which are built from the variational circuits mentioned above. For example, a recent result scaled such a method to a water molecule on an extremely noisy quantum computer.

Since machine learning and quantum chemistry are both heavily based on optimization, it is not surprising that they can both leverage similar quantum algorithms. A variational quantum eigensolver is, in essence, the same algorithm as a variational classifier, which was introduced as an innovative way to use quantum computers for machine learning. Understanding gained from the machine learning side will translate to new insights on the chemistry side. Good quantum machine learning algorithms will therefore have immediate consequences for other quantum applications based on data and optimization.
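A minimal sketch of a variational eigensolver on one qubit illustrates this structural similarity. The Hamiltonian H = aZ + bX, its coefficients and the ansatz RY(θ)|0⟩ are assumptions. We chose them so that the result can be checked against the exact ground-state energy -√(a² + b²). The loop has the same shape as training a variational classifier. A (here simulated) quantum device supplies the cost and the parameter-shift gradients, and a classical optimizer updates θ. Only the cost function differs.

```python
import math

def energy(theta, a, b):
    """<psi(theta)| a Z + b X |psi(theta)> for the ansatz |psi(theta)> = RY(theta)|0>."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    exp_z = amp0 ** 2 - amp1 ** 2  # <Z>
    exp_x = 2 * amp0 * amp1        # <X>, valid because the amplitudes are real here
    return a * exp_z + b * exp_x

def vqe(a, b, theta=0.1, lr=0.2, steps=200):
    """Minimize the energy by gradient descent with parameter-shift gradients."""
    for _ in range(steps):
        grad = (energy(theta + math.pi / 2, a, b) - energy(theta - math.pi / 2, a, b)) / 2
        theta -= lr * grad
    return theta, energy(theta, a, b)

a, b = 1.0, 0.5              # hypothetical Hamiltonian coefficients
theta_opt, e_opt = vqe(a, b)
exact = -math.hypot(a, b)    # lowest eigenvalue of a Z + b X
print(e_opt, exact)
```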

In summary, quantum machine learning has the potential to enable and to innovate future AI applications, and to contribute to the development of quantum computing itself. These are three reasons why quantum machine learning may have a big future when it comes to small-scale quantum devices.

This opinion piece is signed by: Maria Schuld, Nathan Killoran, Thomas Bromley, Christian Weedbrook, Peter Wittek
